Sp(e)lunking with ChatGPT

The Elephant in the Room

By now, ChatGPT should be something you’ve heard about or are starting to hear about at your organization. I tend to approach new products surrounded by significant hype with humble trepidation and reasonable apprehension. In recent months, ChatGPT and OpenAI have garnered a lot of news coverage, along with panic about an AI takeover that will result in all of us losing our jobs (😅). I don’t view it that way. I think this is a tool we can use thoughtfully to create new tools and solutions for problems that are more esoteric or abstract in nature. I want to start this post with two very important facts:

- I am not a programmer.

- 85% of the code used in this project was generated during my conversation with ChatGPT.

I say these two things for transparency (and also so you won’t judge my awful coding style/habits).

The Project

To summarize my goal: I wanted to know whether it was possible to build a tool that lets security professionals easily create detection logic when given nothing more than a raw log payload. The tool had to be both intuitive and robust. I also wanted to eliminate as much human error as possible when writing something like Splunk SPL. With that in mind, I think I have planted the seed of something that could empower analysts and detection-minded folks to build more detections, faster.

Project Breakdown

Currently, the tool is a web application built with Electron. This allows it to function as either:

- a portable, standalone app distributed as a compiled cross-platform binary, or

- a locally hosted application that can interface with your existing stack.

Usage and Demo

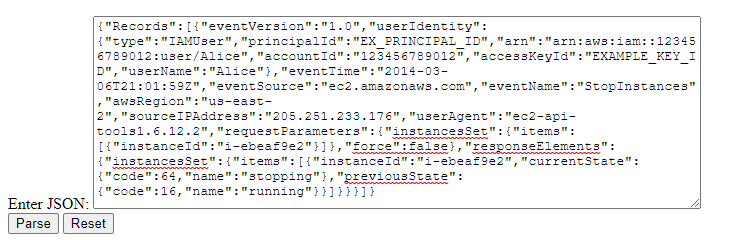

Input Raw JSON Payload

The first thing we need to do is input a raw JSON blob/log/payload into the input text box. Let’s imagine we have the following example JSON log from CloudTrail:

{"Records":[{"eventVersion":"1.0","userIdentity":{"type":"IAMUser","principalId":"EX_PRINCIPAL_ID","arn":"arn:aws:iam::123456789012:user/Alice","accountId":"123456789012","accessKeyId":"EXAMPLE_KEY_ID","userName":"Alice"},"eventTime":"2014-03-06T21:01:59Z","eventSource":"ec2.amazonaws.com","eventName":"StopInstances","awsRegion":"us-east-2","sourceIPAddress":"205.251.233.176","userAgent":"ec2-api-tools1.6.12.2","requestParameters":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2"}]},"force":false},"responseElements":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2","currentState":{"code":64,"name":"stopping"},"previousState":{"code":16,"name":"running"}}]}}}]}

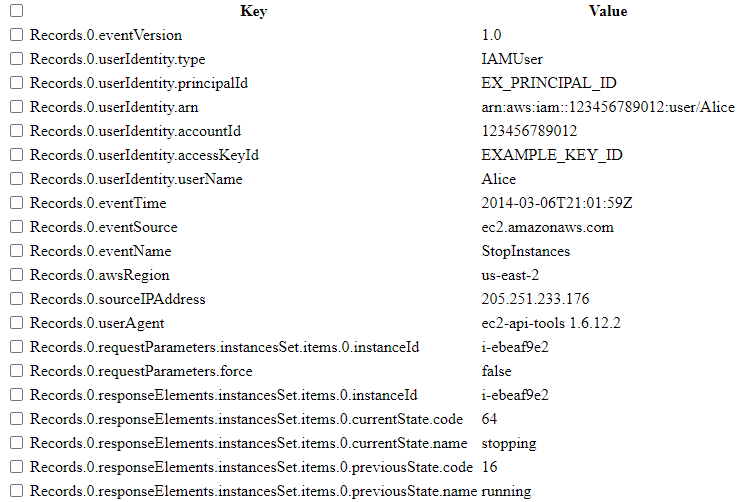

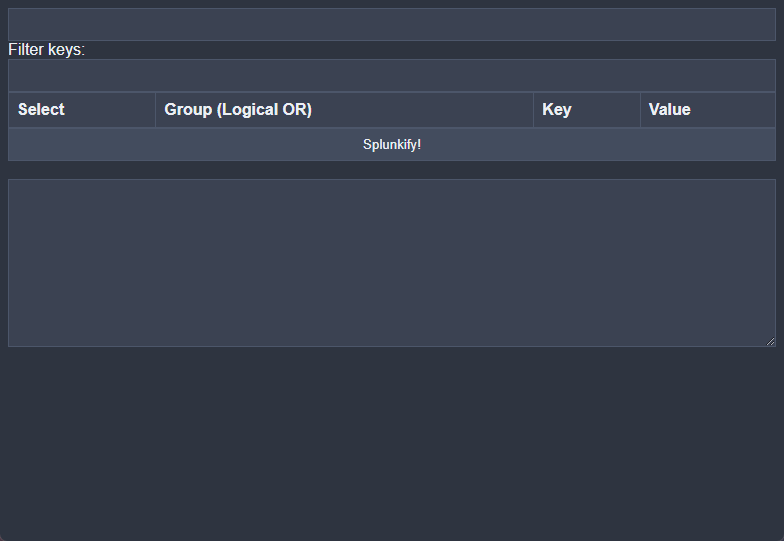

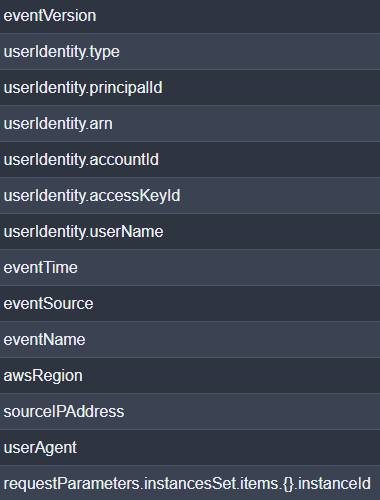
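Before any table can be built, the pasted text has to be valid JSON. The snippet below is a minimal sketch of that input check, not the app’s actual code: the `parsePayload` helper and its error messages are hypothetical, and it assumes rows are only built from a top-level JSON object.

```typescript
// Hypothetical result type for the input-box check.
type ParseResult =
  | { ok: true; value: Record<string, unknown> }
  | { ok: false; error: string };

// Hypothetical helper: validate the raw text pasted into the input box.
function parsePayload(raw: string): ParseResult {
  let value: unknown;
  try {
    value = JSON.parse(raw);
  } catch (e) {
    // JSON.parse throws a SyntaxError on malformed input (e.g. a truncated paste).
    return { ok: false, error: `Invalid JSON: ${(e as Error).message}` };
  }
  // Assumption: only a top-level JSON object is turned into key-value rows.
  if (value === null || typeof value !== "object" || Array.isArray(value)) {
    return { ok: false, error: "Expected a JSON object at the top level" };
  }
  return { ok: true, value: value as Record<string, unknown> };
}

const good = parsePayload('{"Records":[{"eventName":"StopInstances"}]}');
const bad = parsePayload('{"Records":[');
console.log(good.ok, bad.ok); // true false
```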

Parse the Blob

Once we input the JSON, the application parses all fields (handling nested fields appropriately) and generates a key-value table with selectable rows. This lets us select the fields or field-value combinations we want to build a specific correlation search for, or group fields that we may want to exclude.

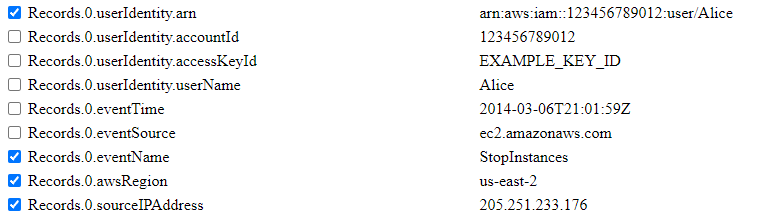

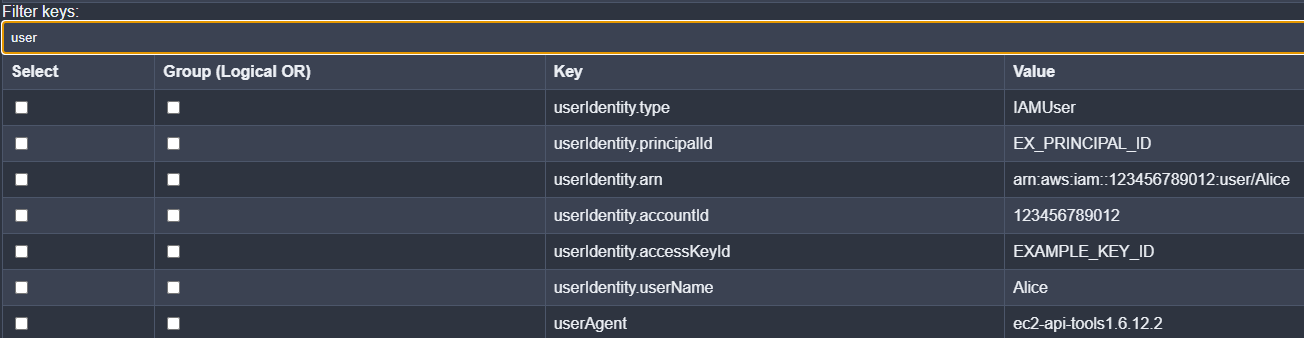

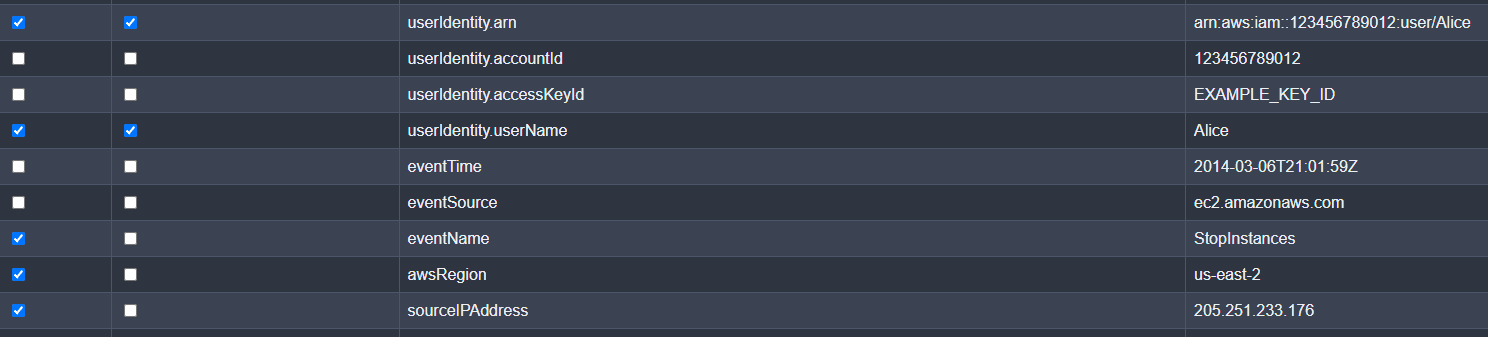
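The flattening step described above can be sketched as a short recursive walk. This is an illustration under assumptions, not the app’s source: each nested object key and each array index becomes one dot-separated path segment (the Records.0.eventName style of field name used later in this post), every scalar becomes one row, and empty objects or arrays produce no rows. The `flatten` function and `Row` type are hypothetical names.

```typescript
// Hypothetical row type for the selectable key-value table.
type Row = { key: string; value: string };

// Recursively walk a parsed JSON value, emitting one row per scalar leaf.
function flatten(value: unknown, prefix = "", rows: Row[] = []): Row[] {
  const join = (segment: string): string => (prefix ? `${prefix}.${segment}` : segment);
  if (Array.isArray(value)) {
    // Array elements contribute their numeric index as a path segment.
    value.forEach((item, i) => flatten(item, join(String(i)), rows));
  } else if (value !== null && typeof value === "object") {
    // Object keys are visited in insertion order.
    for (const [k, v] of Object.entries(value)) flatten(v, join(k), rows);
  } else {
    // Strings, numbers, booleans and null each become a single row.
    rows.push({ key: prefix, value: String(value) });
  }
  return rows;
}

const log: unknown = JSON.parse(
  '{"Records":[{"eventName":"StopInstances","userIdentity":{"userName":"Alice"},"requestParameters":{"force":false}}]}',
);
for (const row of flatten(log)) console.log(`${row.key} = ${row.value}`);
// Records.0.eventName = StopInstances
// Records.0.userIdentity.userName = Alice
// Records.0.requestParameters.force = false
```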

Select Key-Value Rows

To choose key-value pairs, we just check the relevant rows. In this scenario, let’s imagine that our AWS IAM user, arn:aws:iam::123456789012:user/Alice, is authorized to call StopInstances, and that this activity is part of her job responsibilities as long as it is performed from our internal IP (205.251.233.176). To narrow the scope further, let’s also require that Alice only perform this API call on our us-east-2 instances. In this case, we just need to select the relevant rows:

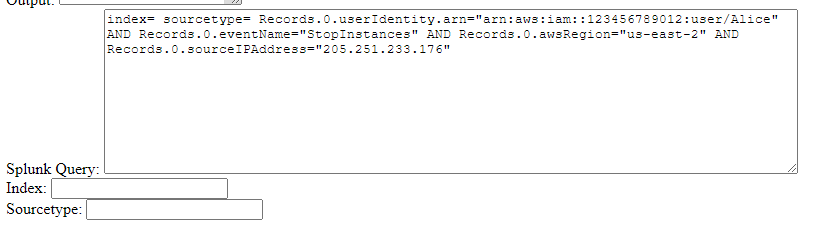

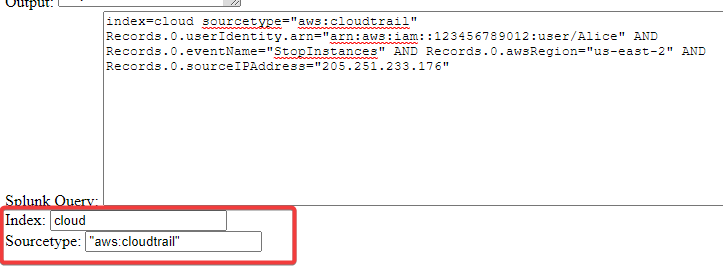

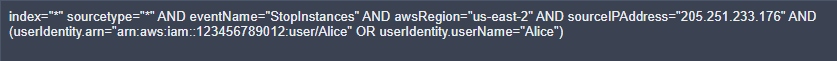
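Behind the checkboxes, the selection can be modeled as a flag on each row. The sketch below is hypothetical (the `selected` field and `toggle` helper are not taken from the app’s source); it checks the four rows from Alice’s scenario and leaves userAgent unchecked.

```typescript
// Hypothetical table row with a checkbox state.
type Row = { key: string; value: string; selected: boolean };

const table: Row[] = [
  { key: "Records.0.userIdentity.arn", value: "arn:aws:iam::123456789012:user/Alice", selected: false },
  { key: "Records.0.eventName", value: "StopInstances", selected: false },
  { key: "Records.0.awsRegion", value: "us-east-2", selected: false },
  { key: "Records.0.sourceIPAddress", value: "205.251.233.176", selected: false },
  { key: "Records.0.userAgent", value: "ec2-api-tools1.6.12.2", selected: false },
];

// Return a new table with one row's checkbox flipped, leaving the input unchanged.
function toggle(rows: Row[], key: string): Row[] {
  return rows.map((r) => (r.key === key ? { ...r, selected: !r.selected } : r));
}

let state = table;
for (const key of [
  "Records.0.userIdentity.arn",
  "Records.0.eventName",
  "Records.0.awsRegion",
  "Records.0.sourceIPAddress",
]) {
  state = toggle(state, key);
}

// Only the checked rows are handed to the SPL generator.
const selected = state.filter((r) => r.selected);
console.log(selected.map((r) => r.key).join("\n"));
```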

Click Button to Generate SPL Logic

Once we’ve selected the criteria we want to build logic for, we just need to click the button, and a Splunk SPL query will be generated for that combination of log artifacts.

If no index or sourcetype is specified, the application defaults to index=* sourcetype=*, but there is an option to specify both.
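The generation step can be sketched as follows, assuming the selected rows arrive as key-value pairs. The `buildSpl` and `quote` names are hypothetical; the defaults reproduce the index=* sourcetype=* fallback described above, and escaping backslashes and double quotes inside quoted values is an added safeguard, not something the post says the app does.

```typescript
// Hypothetical shape of a selected row.
type Condition = { key: string; value: string };

// Wrap a value in double quotes, escaping backslashes and embedded quotes.
function quote(value: string): string {
  return `"${value.replace(/\\/g, "\\\\").replace(/"/g, '\\"')}"`;
}

// Join every selected condition with AND after the index/sourcetype scope.
function buildSpl(conditions: Condition[], index = "*", sourcetype = "*"): string {
  const scope = `index=${index} sourcetype=${sourcetype === "*" ? "*" : quote(sourcetype)}`;
  const clauses = conditions.map((c) => `${c.key}=${quote(c.value)}`).join(" AND ");
  return clauses ? `${scope} ${clauses}` : scope;
}

const selected: Condition[] = [
  { key: "Records.0.userIdentity.arn", value: "arn:aws:iam::123456789012:user/Alice" },
  { key: "Records.0.eventName", value: "StopInstances" },
];
console.log(buildSpl(selected));
// index=* sourcetype=* Records.0.userIdentity.arn="arn:aws:iam::123456789012:user/Alice" AND Records.0.eventName="StopInstances"
console.log(buildSpl(selected, "cloud", "aws:cloudtrail"));
// index=cloud sourcetype="aws:cloudtrail" Records.0.userIdentity.arn="arn:aws:iam::123456789012:user/Alice" AND Records.0.eventName="StopInstances"
```

Quoting every value is a conservative choice: it keeps values that contain spaces or other special characters together as a single search term.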

Tailor the SPL as Needed

Right now, the generated SPL joins every selected condition with a logical AND. But let’s imagine that we want to build a search for the user running StopInstances in regions they are not authorized for. Then we only need to make a single-character adjustment (changing = to != on the awsRegion field):

index=cloud sourcetype="aws:cloudtrail"
Records.0.userIdentity.arn="arn:aws:iam::123456789012:user/Alice"
AND Records.0.eventName="StopInstances"
AND Records.0.awsRegion!="us-east-2"
AND Records.0.sourceIPAddress="205.251.233.176"

Granted, this is just an example (and admittedly a bad one). But I think it demonstrates the more important point: the heavy lifting was done for us, and we know the field names and values are spelled correctly. We can delete from or add to the query as needed with minimal effort. This kind of workflow puts the power in the hands of the analyst/engineer and their imagination. In the future, the logical operators and field grouping will be more modular, but for now I’ll take adding one character over writing 222 characters of SPL by hand every single day.
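One way to make that single-character edit part of the generator is to store an operator on each condition. This is a hypothetical sketch (`Operator`, `negate`, and `buildSpl` are illustrative names, not the app’s code). Note that in SPL, `field!=value` only matches events where the field exists, while `NOT field=value` also matches events that lack the field entirely, so the two are not interchangeable when a field may be missing.

```typescript
// Hypothetical: each condition carries its own comparison operator.
type Operator = "=" | "!=";
type Condition = { key: string; value: string; op: Operator };

const quote = (v: string): string => `"${v.replace(/\\/g, "\\\\").replace(/"/g, '\\"')}"`;

// Render the scope followed by every condition joined with AND.
function buildSpl(scope: string, conditions: Condition[]): string {
  const clauses = conditions.map((c) => `${c.key}${c.op}${quote(c.value)}`).join(" AND ");
  return clauses ? `${scope} ${clauses}` : scope;
}

// Flip one field between "=" and "!=", returning new conditions.
function negate(conditions: Condition[], key: string): Condition[] {
  return conditions.map((c): Condition => (c.key === key ? { ...c, op: c.op === "=" ? "!=" : "=" } : c));
}

const scope = 'index=cloud sourcetype="aws:cloudtrail"';
const allowed: Condition[] = [
  { key: "Records.0.userIdentity.arn", value: "arn:aws:iam::123456789012:user/Alice", op: "=" },
  { key: "Records.0.eventName", value: "StopInstances", op: "=" },
  { key: "Records.0.awsRegion", value: "us-east-2", op: "=" },
  { key: "Records.0.sourceIPAddress", value: "205.251.233.176", op: "=" },
];
console.log(buildSpl(scope, negate(allowed, "Records.0.awsRegion")));
// index=cloud sourcetype="aws:cloudtrail" Records.0.userIdentity.arn="arn:aws:iam::123456789012:user/Alice" AND Records.0.eventName="StopInstances" AND Records.0.awsRegion!="us-east-2" AND Records.0.sourceIPAddress="205.251.233.176"
```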

The Result

Within 10-30 seconds, we can go from pasting a raw log to generating valid SPL for the selected artifacts. We don’t need to worry about syntax, spell-checking field names/values, or even parsing through the log manually. For a version 1.0, I think this is pretty freaking cool. Oh, I’m also publicly releasing the source code, which will be available here once I’ve cleaned up a few things.

Future Plans

I do plan on adding more functionality to this project down the line, but for now I just wanted to showcase a simple solution, built with a little creativity and the help of OpenAI.

TO-DO

- Additional Logical Operators (OR, NOT, CONTAINS)

  - Something like field=*string_match_wildcarding*

- Logical Grouping (e.g. | search (field1 AND field2) OR field3)

- Automation of exclusions (| search NOT) based on selections.
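To make these TO-DO items concrete, here is a hypothetical sketch of how such operators might be rendered; none of it exists in version 1.0, and the `Clause` type and `render` function are illustrative names only. CONTAINS is rendered as a wildcard match (field="*text*"), grouping adds parentheses, and an exclusion becomes a | search NOT clause.

```typescript
// Hypothetical clause tree for the planned operators.
type Clause =
  | { kind: "eq"; key: string; value: string }
  | { kind: "contains"; key: string; value: string }
  | { kind: "not"; clause: Clause }
  | { kind: "and"; clauses: Clause[] }
  | { kind: "or"; clauses: Clause[] };

const quote = (v: string): string => `"${v.replace(/\\/g, "\\\\").replace(/"/g, '\\"')}"`;

// Render a clause tree as SPL search terms.
function render(c: Clause): string {
  if (c.kind === "eq") return `${c.key}=${quote(c.value)}`;
  // CONTAINS as a wildcard match, e.g. field="*string_match_wildcarding*".
  if (c.kind === "contains") return `${c.key}=${quote(`*${c.value}*`)}`;
  if (c.kind === "not") return `NOT ${render(c.clause)}`;
  // Remaining kinds are "and"/"or": parenthesize so the grouping is explicit.
  return `(${c.clauses.map(render).join(c.kind === "and" ? " AND " : " OR ")})`;
}

const eq = (key: string, value: string): Clause => ({ kind: "eq", key, value });

// (field1 AND field2) OR field3, as in the TO-DO item.
const grouped: Clause = {
  kind: "or",
  clauses: [{ kind: "and", clauses: [eq("eventName", "StopInstances"), eq("awsRegion", "us-east-2")] }, eq("userName", "Alice")],
};
console.log(`| search ${render(grouped)}`);
// | search ((eventName="StopInstances" AND awsRegion="us-east-2") OR userName="Alice")

const exclusion: Clause = { kind: "not", clause: { kind: "contains", key: "userAgent", value: "ec2-api-tools" } };
console.log(`| search ${render(exclusion)}`);
// | search NOT userAgent="*ec2-api-tools*"
```

The outermost parentheses are redundant but harmless in SPL; they simply keep the renderer uniform.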

Can I Help/Contribute?

Yes. I’m interested to see what other people can come up with, or how this can be applied at scale. If you have cool ideas, or if you want to contribute anything at all, please do. If you’re hesitant to get involved, just remember my first statement: I am not a programmer.

Updates

Rather than constantly making new posts about minor changes to this project, I’m going to use this page to showcase minor feature updates to the app as I go along.

Introducing version 1.1 (18 March 2023)

What’s New?

The first thing you’ll probably notice is that the application now uses the Nord color scheme. Icy dark blues are pretty easy on the eyes, and people were complaining about their eyes melting.

You’ll also notice a new column, temporarily labeled Group (Logical OR). This selector lets you group key-value pairs so that they are joined by OR and enclosed in parentheses.
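A minimal sketch of how a Group (Logical OR) column could feed the query, assuming only checked rows are used, ungrouped rows are joined with AND, and the grouped rows are appended as one parenthesized OR clause at the end. The `grouped` flag and `buildSpl` function are hypothetical; the app may order or combine clauses differently.

```typescript
// Hypothetical row: checked for inclusion, optionally marked for the OR group.
type Row = { key: string; value: string; selected: boolean; grouped: boolean };

const quote = (v: string): string => `"${v.replace(/\\/g, "\\\\").replace(/"/g, '\\"')}"`;

function buildSpl(rows: Row[], index = "*", sourcetype = "*"): string {
  const clause = (r: Row): string => `${r.key}=${quote(r.value)}`;
  const chosen = rows.filter((r) => r.selected);
  // Ungrouped rows are ANDed together.
  const terms = chosen.filter((r) => !r.grouped).map(clause);
  // Grouped rows are ORed and wrapped in parentheses as a single term.
  const ored = chosen.filter((r) => r.grouped).map(clause);
  if (ored.length > 0) terms.push(`(${ored.join(" OR ")})`);
  const scope = `index=${index} sourcetype=${sourcetype === "*" ? "*" : quote(sourcetype)}`;
  return terms.length > 0 ? `${scope} ${terms.join(" AND ")}` : scope;
}

const rows: Row[] = [
  { key: "eventName", value: "StopInstances", selected: true, grouped: false },
  { key: "sourceIPAddress", value: "205.251.233.176", selected: true, grouped: true },
  { key: "userIdentity.userName", value: "Alice", selected: true, grouped: true },
  { key: "awsRegion", value: "us-east-2", selected: false, grouped: false },
];
console.log(buildSpl(rows, "cloud", "aws:cloudtrail"));
// index=cloud sourcetype="aws:cloudtrail" eventName="StopInstances" AND (sourceIPAddress="205.251.233.176" OR userIdentity.userName="Alice")
```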

Cleaner JSON Parsing

Records.0.<field_name> is not how these logs are typically represented in a SIEM (thanks to rborum for pointing this out). To address this feedback, I added logic to ignore the outermost JSON object and only parse the nested objects inside it. In a CloudTrail log, for example, you’ll typically have a separate log event per “record”, so it didn’t really make sense to display Records.0 when there is no Records.1. Additionally, deeper nested fields that contain empty or single-object lists are now represented as .{}. instead of .0., because in my experience that is how Splunk parses them. Here’s a sample of what the output looks like now (compare with above).
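The two parsing rules above can be sketched like this. These are assumptions, not the app’s source: the outer wrapper is dropped only when the top-level object has a single key (such as CloudTrail’s Records) holding one record; a list with zero or one element is written with {} instead of an index; and, following Splunk’s own JSON field naming (e.g. Records{}.eventName), the {} is attached directly to the list’s name, so the exact separator may differ from the app’s .{}. output. `parseLog` and `flatten` are hypothetical names.

```typescript
type Row = { key: string; value: string };

const join = (prefix: string, segment: string): string => (prefix ? `${prefix}.${segment}` : segment);

// Walk the JSON; lists with at most one element use {} instead of an index.
function flatten(value: unknown, prefix: string, rows: Row[]): void {
  if (Array.isArray(value)) {
    if (value.length <= 1) {
      // Empty lists produce no rows in this sketch; single-item lists become name{}.
      value.forEach((item) => flatten(item, `${prefix}{}`, rows));
    } else {
      value.forEach((item, i) => flatten(item, join(prefix, String(i)), rows));
    }
  } else if (value !== null && typeof value === "object") {
    for (const [k, v] of Object.entries(value)) flatten(v, join(prefix, k), rows);
  } else {
    rows.push({ key: prefix, value: String(value) });
  }
}

// Drop a single outer key wrapping one record, e.g. {"Records":[{...}]}.
function parseLog(log: unknown): Row[] {
  let root: unknown = log;
  if (root !== null && typeof root === "object" && !Array.isArray(root)) {
    const entries = Object.entries(root);
    if (entries.length === 1) {
      const inner: unknown = entries[0][1];
      const record: unknown = Array.isArray(inner) && inner.length === 1 ? inner[0] : inner;
      // Only unwrap when what is left is still an object with fields of its own.
      if (record !== null && typeof record === "object" && !Array.isArray(record)) root = record;
    }
  }
  const rows: Row[] = [];
  flatten(root, "", rows);
  return rows;
}

const sample: unknown = JSON.parse(
  '{"Records":[{"eventName":"StopInstances","requestParameters":{"instancesSet":{"items":[{"instanceId":"i-ebeaf9e2"}]},"force":false}}]}',
);
for (const r of parseLog(sample)) console.log(`${r.key} = ${r.value}`);
// eventName = StopInstances
// requestParameters.instancesSet.items{}.instanceId = i-ebeaf9e2
// requestParameters.force = false
```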

Filtering on Field Names

We can now enter text to string-match against parsed field names. This is particularly useful when the input JSON is extremely large, or when we want to quickly find fields that are relevant to an investigation or our engineering goal. Typing into the text box dynamically updates the table of key-value pairs.
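A minimal sketch of the field-name filter, assuming a case-insensitive substring match against keys only (values are not searched) and that an empty box shows the full table. `filterRows` is a hypothetical name; re-running it on every change to the text box is what keeps the table updating as you type.

```typescript
type Row = { key: string; value: string };

// Case-insensitive substring match on field names; values are ignored.
function filterRows(rows: Row[], query: string): Row[] {
  const needle = query.trim().toLowerCase();
  if (needle === "") return rows; // An empty box shows every row.
  return rows.filter((r) => r.key.toLowerCase().includes(needle));
}

const rows: Row[] = [
  { key: "eventName", value: "StopInstances" },
  { key: "awsRegion", value: "us-east-2" },
  { key: "sourceIPAddress", value: "205.251.233.176" },
  { key: "userIdentity.userName", value: "Alice" },
  { key: "userAgent", value: "ec2-api-tools1.6.12.2" },
];
console.log(filterRows(rows, "User").map((r) => r.key).join(", ")); // userIdentity.userName, userAgent
```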

Example Usage with Grouping

Here’s what it looks like now when we select fields and include them in a logical “OR” group:

*Notice that the fields selected for grouping are enclosed in a set of parentheses and separated by an OR operator rather than an AND.